I was actually having a pretty good conversation with the avatar interviewer. We had discussed my current work, my PhD thesis, and recent research developments I found compelling. It only took a few seconds to adjust to the reality: that this was the interview, that I would talk only to this avatar, that this avatar had accurately regurgitated some of the more arcane phrases related to particularly new research techniques that it probably hadn't heard before, that this avatar was steering this conversation in predetermined directions about as well as some human "recruiters" I'd spoken to. I thought back to previous conversations I'd had, at similar phases of similar job applications, and, honestly, this wasn't so different. "There's a formula," I'd been told, and it pretty much was that way. Now it had been formalized, finalized, and rendered.

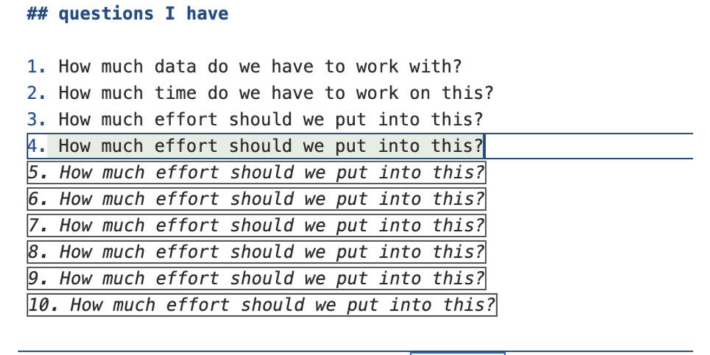

And then it asked me some questions I hadn't gotten before in an interview like this: What made a good multiple choice question? And if I were to write a multiple choice question, what would make for a difficult incorrect answer?

I thought of "the ultimate truth of jigsaw puzzles," according to French novelist and filmmaker Georges Perec. "Despite appearances, puzzling is not a solitary game: every move the puzzler makes, the puzzlemaker has made before,” he wrote in his most famous novel, Life: A User’s Manual, “every piece the puzzler picks up, and picks up again, and studies and strokes, every combination he tries, and tries a second time, every blunder and every insight, each hope and each discouragement have all been designed, calculated, and decided by the other." In a standard interview setting, bringing up Perec might have taken the conversation in a new and interesting direction, or revealed something unexpected about myself to the interviewer. I didn’t know much about interviewing with a robot, though, so I decided to play it safe and omit the literary reference.

I answered, instead, that I hadn't really thought about it before. It wasn't obvious to me that there was a straightforward mechanism by which good, incorrect answers could be generated—but then again, I hadn't spent much time thinking about it, and it seemed interesting. Necessarily, I added, the incorrect answer would have to be plausible: It couldn't be something that would be easy to rule out immediately. My thoughts started to spin: I thought of Perec, and what he would think of this interview; I thought of myself, and what this job would actually be like; I thought of the robot, and what I thought the robot might want to hear, which I realized was the wrong question after all, so I thought of whoever would sit on the other side of the robot, if anyone, and their thoughts, and their thoughts about me; I thought of the people that I might trick with my wrong multiple choice answers, and what they might be thinking about when they saw the question.

I realized I was rambling. I stopped. The avatar paused longer than it had after my other answers. I knew it wasn't thinking, but I didn't know what was happening. Where in the script were we?

It thanked me. I didn't know if I should thank it, because obviously this experience was being simultaneously lived by untold numbers of other people, or had been very recently, or would be very soon, and no one to be thanked had reviewed our footage yet, nor maybe would they ever. I chose to say "thanks" anyway, then self-consciously laughed, then closed the browser tab.

The next day, I received a job offer to work for xAI as a contract employee, via the intermediary hiring firm, Mercor, that conducted the interview. Onboarding would begin the day after.

My background is in academia. I was in grad school and various postdoctoral positions for well over a decade. I considered myself to be a top prospect on the faculty job market for half of those postdoctoral years. Each year there were around three faculty jobs advertised at R1 universities that were targeted towards my subfield. Accounting for "senior hires" (people who already have faculty positions who choose to leave them), this means that I had about 10 chances at getting a permanent job like the one I was trained for, and, despite making it to the final round of interviews several times, I was unable to get a faculty offer.

Nothing has a clean end. As my final postdoc wrapped up, I was offered a year-long postdoc at a top institution on the opposite coast, with the express purpose of earning one more try at the faculty market. But it would have meant moving cross-country, and likely a temporary separation from my spouse, followed by yet another move to yet another city—which, realistically, still might not have been my final destination. These are choices that academics make all the time, and that I had made a few times already. In the end, I decided that it was time to move on.

Instead, I accepted an offer in an adjacent academic field with a friend who is an expert in machine learning who had some grant money to spare. I had never taken a programming class and didn’t know a multi-layer perceptron from a random forest classifier, so the deal was that I would set aside my own curiosity-driven research objectives, advance my friend's research agenda, and learn some new techniques as I prepared for a career outside of academia. I was able to work remotely and choose where I wanted to live. I would be paid from what is called "soft money," but as long as nothing changed too fundamentally with the way grant money was appropriated, it felt like a safe, lateral move.

To do my new job, I would need to completely change how I wrote computer code. I'd written small pieces of code for numerical calculations for my own research in the past, but part of the purpose of my new position was to write code that would be accessible to other people. Specifically, they should be able to install our code, re-run our calculations to verify their validity, and then use that code as a building block in their own research. Learning how to turn code into a transferable and build-upon-able unit is called "packaging," and the way that this is accomplished across a wide range of academia and for-profit companies is through a collaboration and versioning software called Git. Making a package publicly accessible on Git is fundamental to the open-source ecosystem that facilitates a lot of computer-based academic research and, as you may have read recently, serves as a philosophical dichotomizing line between leading artificial intelligence labs.

The hope was that learning how to do these things—how to do machine learning in academia, how to turn those questions into useful new code, how to turn that code into a package, how to set that package loose in the wild—would be mutually beneficial for me and for my grant-winning friend. My new group would get my research ability (my skill in breaking a big question down into meaningful but more manageable chunks), hoping that this would be transferable across fields. I'd get mentorship in writing and packaging code, hoping that this would make me more hirable. To whom? In the back of my mind, I suppose I was hoping to get a job at a big tech firm.

Despite having the advantages of an accommodating boss and a network of friendly ex-academics in several of those firms, I couldn’t nail the dismount. The problem was that I was terrible at interviewing. Across many firms, the tech interview process has a consistent and legible structure: a screener interview, one or two coding rounds, and a behavioral interview. No aspect of it came naturally to me. For months, I couldn't even make it past the screener interview, until I watched some YouTube videos and realized that the prompt "tell me about yourself" that every screener asked me was not actually an invitation to tell them about myself, but rather a cue for me to tell a 90-second story whose culmination was my realization that my skills and interests aligned perfectly with the thing their company did. Getting a new job felt more like leaving my old career than choosing to do something different, and I took for granted that being broadly smart and adaptable would be sufficient to get something new—which is to say, the Perec anecdotes would have fallen flat with human screeners, too. The coding rounds were difficult in an entirely different way—brain-teaser type questions whose answers require clever methods that are taught to freshman computer-science majors, and which I was unable to figure out in the allotted time.

I only made it to the final behavioral interview once, but by that point I had been rejected by a sufficient number of tech companies that I was no longer interviewing for full-time jobs. Instead, this would be an hourly role (at a company that I’ll refer to as yAI, for clarity). I would in effect be put into an eternal interview cycle at yAI. If I performed well enough, I would have an opportunity to join the company on a permanent basis. But given the tenuous nature of the offer, and my continuing full-time job, it was going to be hard to impress them. How would I navigate the expectations of yAI and secure a full-time job without prematurely leaving my academic appointment? So, without telling the principal investigator of my academic job, I started to bill around 10 hours per week at yAI.

The contrasts with academic work were stark. Academic coding is very concerned with slow development, careful testing, and thorough documentation. Tech company work was fast and intentionally iterative in the sense that a first version was not expected to work comprehensively. This "move fast and break things" ethos felt, to me, like I was sending out something incomplete, but in my limited experience, at least, it remains a core part of tech development today. In the end, I decided that it would be worth it to make some extra cash, get some experience, and possibly land a permanent role, and I felt okay about the work I was doing for yAI. It wasn't inspiring, but it was at least somewhat interesting, and given the tenuous and impermanent nature of my academic job, I felt like I needed to go out on a limb a little bit to find my next opportunity. And, selfishly, I was able to learn some new things, both practical and conceptual, without feeling like I was making the world a substantially worse place. I would self-report my hours and keep my own timesheet. In a lot of ways the experience of work at the tech company didn't differ too much from my remote academic work, just at a slightly different pace and level of polish.

The work for xAI differed more substantively. The solicitation email told me that my LinkedIn profile caught their eye, and that I would have the ability to work with a "top AI lab." After passing the interview and signing the IP agreement, I was added to the xAI Slack along with what seemed like hundreds of other PhDs, some of whom worked a little more diligently than I did.

The task turned out to be translating questions from a PDF version of a textbook and its answer key. The workflow was as follows: I’d claim a problem number from a spreadsheet, look it up in the textbook—in several cases, replete with front matter and its admonition that it not be reproduced for any purpose without the express consent of the publisher. Then I’d determine whether or not the problem met a set of structural requirements: How many parts were there? Was there an unambiguous, quantitative answer, or was it more open-ended and qualitative? I’d enter the “translated” question into another portal, and repeat. In order to do all of this, I would need to be logged in on an xAI VPN and I would need to enable a program called Workpuls to record the contents of my screen. If I did all of that, I could log hours, but if I didn't interact with my computer for 10 minutes it would ask if I was "still working?" If I didn't reply within five minutes, it would erase the previous 15 minutes from my timesheet and automatically pause the timesheet software. This was a highly refined form of surveillance capitalism that I hadn't encountered before.

Why are they going through this trouble? The issue for "top AI labs" is training data. Large language models were trained on essentially all of the text of the internet—every Wikipedia edit, every Reddit thread, every Github repository. The chatbots borne from this trove of data are debatably good at regurgitating this information back to you, but I think it's impossible to deny at this point that they're doing something. Maybe their prose is formulaic, but writing code, to take one example, is formulaic, and the problems that come up in code writing are rarely unique or interesting on their own. Choosing what you want to say and how you want to say it is the knot that must be untied to produce quality writing. But there's a lot of writing out there that doesn't require quality. Putting all of the text on the internet into a blender and hitting "pulse" a few times doesn't irrevocably harm the production of an email response.

But going beyond the most formulaic writing will require something more, and none of these labs really know quite what that is. At least one direction they're going to try, of course, is training their models on expert writing. This is the project that I was drafted into closely surveilled gig work for: providing higher-quality grist for the AI mill.

Given the stultifying nature of the work, and the request from xAI that I devote at least 10 hours per week to the project, I chose to stop working that job. (I would like to pretend that it had to do with a certain awkward arm gesture and the dawn of the DOGE era, but I opted out slightly before those events.) Having been hired, though, my résumé was now in the system, much like being on file at a temp agency. So within a few days, I got an email that my "profile on the Mercor platform seemed like a great fit for a project we are starting next week with a leading AI lab." Intriguingly, the solicitation said that they were "looking for experts who are familiar with the frontier of ML research and are comfortable with reviewing and reproducing well-known papers." The pay was higher than the xAI project, and reading papers felt within the scope of my academic work, so I didn't feel too conflicted about trying this one out, too.

I clicked through to the job application. Within moments I was again speaking to a robot avatar, but I had adjusted to the new reality, and it felt much less disconcerting this time. This time, the stumper question was: What is my process when I am asked to be a referee in the peer review process for an academic journal? I considered what it wanted to hear, and I described something pretty close to the idealized form of what I'd like to do while refereeing.

Within a few hours I was asked to complete some online code tests. These were different from the coding tests I'd failed so many times before when I was applying to "real" jobs. Instead, I had to do some basic Git operations, and then I had to code up some basic machine learning functions. In order to log in and complete the tasks, I had to enable a new screen-sharing software. This one was called Loom and its logo was a panopticon. Upon successfully completing these challenges, I was added to yet another Slack for yet another large tech firm. (There were sufficiently few of us involved in this task that revealing the name of the firm would make me substantially more identifiable, so I will omit it here.)

In contrast with my first gig work experience at xAI, and the more commonplace tech work at yAI, this task was both interesting and difficult: I was asked to reproduce the results of a paper that had won an award at a recent conference. It was part of a segment of the literature known as "reinforcement learning," a fundamentally different type of machine learning algorithm than I've worked on before. I was supposed to reproduce the key findings of the paper, much as a referee would be asked to do for a journal or conference proceeding. I was challenged by the material and devoted several hours of my full attention to it, learning as I went. In contrast to the xAI gig, I think this job will culminate in something of a race pitting humans against a forthcoming "code agent." I was playing the part of humanity's coding representative.

Ultimately I was not able to find enough time to complete the task. They requested 20-40 hours per week, and I couldn't devote more than 10 hours to it, given my other obligations. I was lightly scolded by my Mercor manager when I said I had run out of time and wouldn't complete the work. Within a few hours, though, he asked if I would be available the following week instead. I continue to get emails from Mercor with "exciting new opportunities" and links to more interviews. Given the general air of uncertainty in federal grant funding, I don't know when I might need to follow up again.

All the while, I've held down my other jobs. My performance at my academic job has started to suffer, but I think my boss understands that we're all starting to feel the stress of the cloud of uncertainty hanging over our funds. My performance at my (slightly more) conventional part-time job is improving all the time as I get the hang of what they want me to do.

Part of that has involved my manager there encouraging me to use a coding assistant. This is an AI that is embedded in the application that I write code in. I've found it to be equal parts distracting, wrong, and helpful. The things that it's best at are things that I've written before in other parts of the codebase, and it saves me the time of looking for a different file, clicking to it, and copy-pasting; it's also good when I'm slightly changing a structure, such as renaming a variable, which it can automatically carry further without me needing to do a find-and-replace. In fact, it really seems to like copying, pasting, and duplicating.

But it is nevertheless true that AI can help with some of my work right now, because some of my work is formulaic and repetitive, and right now AI is better than some people at some things. That’s not really unfamiliar—your TI-89 was better than you at multiplication. But as the material fed into the AI training models becomes of higher and higher quality, and as the models and hardware continue to improve (and costs go down), that boundary will rise higher and higher, and it will pass through more and more of what we think of as human. Maybe someday I’ll decide to tell a robot my Perec story, and maybe it will understand the reference better than my colleague will (or, maybe, better than I do).

The rest of the story of AI will only get more complicated and knottier. Because the problem we face first will, perhaps obviously, not be the threat of AI becoming a superintelligence that is better than all people at all things; and the important discussion to have is not whether or not to let that happen, nor how to put the just right English on the ball while we still have it in our grasp to ensure its trajectory curves towards beneficence rather than malevolence. In fact, I think those problems are convenient distractions from the real problem. The real problem, the one we will face first, whether we want to or not, and perhaps very soon, will be: Are we prepared for what will happen when AI becomes cheaper than most people at most things? The future might not be very human; it might not even be in the future.